Maximizing HTTP/2 performance with GenStage

A core feature of our Forza Football app is push notifications about live match events. With Apple moving their push notifications services to HTTP/2, we wanted to take advantage of the functionalities that their new API provides and at the same maximize performance and improve resource usage with the new platform.

At the end of 2015, Apple announced an update to APNs (Apple Push Notification service) API. Part of the update was to move communication over HTTP/2 in order to improve performance and be able to deliver instant feedback to clients sending push notifications. A few months ago, since we were rewriting our push notifications system (we wrote about it in the past), we decided to use the new HTTP/2 API so that we would be prepared for the future and the eventual deprecation of the original API.

A (very) quick HTTP/2 primer

HTTP/2 is the new version of HTTP. It brings many improvements while keeping the same semantics of HTTP/1.1. One of these improvements is multiplexing: all communication that happens between client and server through TCP happens on one HTTP/2 connection. However, on one connection there can be multiple streams. A stream is similar to an HTTP/1.1 request but can be initiated - opened, in HTTP/2 lingo - by both server or client. For example, if a browser (client) wants to load a website from a server using HTTP/2, it will first open a connection (which is a direct mapping to a TCP connection) with the server. After that, it will initiate one stream for each resource it needs to load, so for example one stream for the HTML content, one stream per image, one stream for the CSS documents, and one for the JavaScript code. What makes HTTP/2 great is that different streams on a single connection are concurrent: at any given time, many streams can be open on one connection. The exact number of streams that can be open at the same time is negotiated between the client and server during the life of the connection.

HTTP/2 has many more features and improvements over HTTP/1.1, but concurrent streams on a single connection are the one you need to know for the rest of this article. If you want more information about HTTP/2, the HTTP/2 website and the HTTP/2 FAQs are great resources to start with.

HTTP/2 and APNs

The new HTTP/2 API that Apple released uses a single HTTP/2 connection to send multiple push notifications. First, a client (for example, a server where your application lives) opens the HTTP/2 connection with an APN server. This connection can be used to send a (theoretically) unlimited amount of notifications, so it’s advised that you keep this connection open, unless you only send notifications in bursts for only a few times each day. When the client needs to send a notification, it opens a stream on the connection and send a POST request on that stream. The APN server replies on that same stream right away and it closes the stream. This interaction is similar to having one request per notification in HTTP/1.1, but performance is much better because of the single shared underlying connection. When one connection is not enough, Apple advises to simply open many connections and distribute the load of notifications on those.

Maximizing performance

We send millions of notifications each day but they mostly happen in big bursts, for example when a goal is scored in a match between two big teams. Since the notifications we send are time-sensitive, we need to send as many as we can in the smallest possible amount of time.

The first quick-and-dirty approach we took was to simply use one APNs connection to send all of our notifications. We kept this connection open at all times. Problems appeared soon in this prototype implementation: putting the terrible performance aside (caused by having a single sink in the single HTTP/2 connection), we were not handling a fundamental characteristic of HTTP/2, that is, the limit on streams that can be open at the same time (we’ll call this limit max concurrent streams). The current max concurrent streams limit Apple negotiates is 1000 concurrent streams. When sending more than 1000 notifications, say 1001, we would open 1001 streams and if no streams would have freed up by the time we were to open the 1001st stream, we would get an HTTP/2 error from Apple’s server telling us we were opening too many streams.

So, one requirement was to never go over the max concurrent streams limit. At the same time, however, we wanted to maximize the performance of each connection by using it as much as possible. In this case, it means that we should strive to have as many streams open at the same time as we can. Ideally, the number of concurrent open streams should be always close to 1000. We tried to achieve this (and improve the single-connection approach) by using many connections alive at the same time and distributing notifications over these connections. The problem with this approach was that we were distributing notifications to connections in a “fair” way, but connections were processing notifications at different speeds (which can be caused by many reasons, like scheduling, connection usage, and so on). We ended up in a situation where some connections striving to keep up with the notifications to send while other connections would be idle. We traced this down to a design problem: we were using a push pattern where we were pushing notifications to connections without considering the state of each connection. The first solution to this that came to mind was starting to use a pull approach where each connection would express its availability so that all connections would get a fair amount of notifications to send, based on their state and usage.

The more we looked at the problem, the more we recognized a pattern:

-

many events come into our system (the notifications to send to each user)

-

we need to “forward” these events to another system (push the notifications to Apple’s platform)

-

we need to rate-limit these events (keeping the number of open streams under the limit)

-

we need “consumers” (APNS connections) to pull data (pending notifications) based on their availability

These requirements naturally drew us to GenStage, which is an Elixir library that provides abstractions for “stages” (producers and consumers) that exchange data between each other in a rate-limited, pulling-oriented way. We decided we wanted to rewrite the handling of notifications on top of GenStage to be able to take advantage of its features.

Before moving our implementation to GenStage, we felt that none of the existing HTTP/2 clients for Erlang or Elixir would satisfy our performance and reliability requirements. So before doing anything else, we wrote an custom optimized HTTP/2 client to use just in this project.

The current implementation

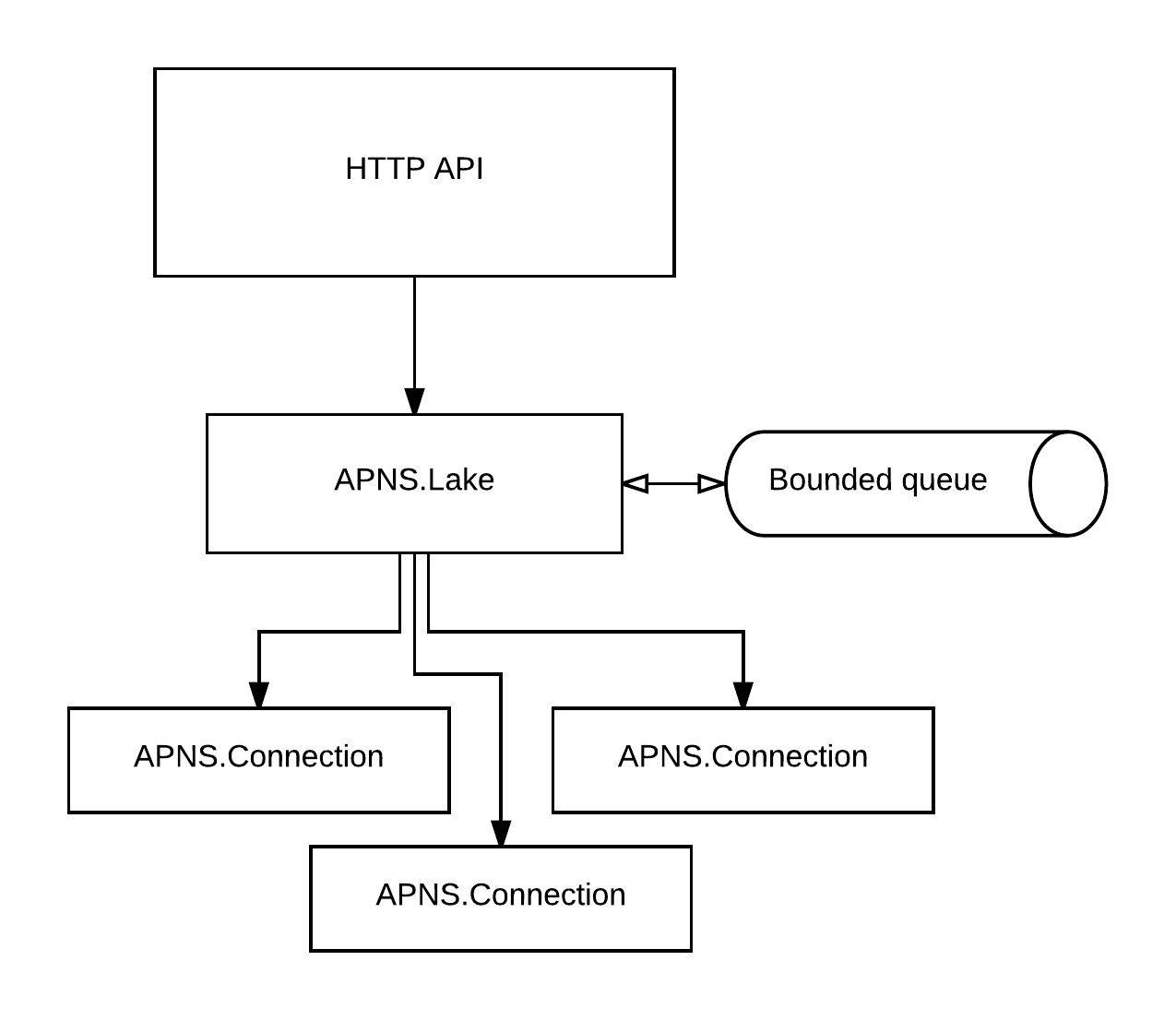

In our current implementation, we have a separate service (an HTTP API) which receives notifications to send to different providers (like APNs for Apple or GCM for Google). This HTTP API keeps several connections to APNs open (around 10) and is responsible for all the rate-limiting of open streams and the spreading of events (notifications) over all the open connections. This service is where GenStage lives. Its architecture looks like this:

The notifications “lake”

Requests that contain multiple notifications to send come into the HTTP API. The first step that we take is to temporarily store these notifications in what we call a “lake” (play on words around a big “pool”). APNS.Lake is a GenStage producer that holds a bounded queue of notifications to send. We use a bounded queue so that we have a back-pressure mechanism if more notifications come in than we can handle. All processes that handle HTTP requests queue notifications in a single APNS.Lake process: while this was thought as a first step before having multiple lakes as well, we found performance was great this way so we are holding off before adding more lake processes.

A simplified version of the APNS.Lake code looks something like this:

defmodule APNS.Lake do

use GenStage

def init(bounded_queue_size) do

state = %{

pending_demand: 0,

queue: BoundedQueue.new(bounded_queue_size),

}

{:producer, state}

end

# This is triggered manually from the HTTP API.

def handle_call({:send_notifs, notifs}, _from, state) do

case BoundedQueue.in_many(state.queue, notifs) do

{:ok, queue} ->

{events, state} = dispatch_demand(queue, state.pending_demand)

{:reply, :ok, events, state}

:full ->

{:reply, {:error, :full}, [], state}

end

end

defp dispatch_demand(state, pending_demand) do

# Dispatches events until either the pending demand

# is satisfied (goes to 0) or we run out of events.

end

end

In the code above, we could let GenStage handle the buffering of events and demand using GenStage’s internal buffer instead of an explicit bounded queue. However, GenStage’s internal buffer drops events when it gets filled up, and we can’t afford to lose events (that is, not send notifications). With a manual implementation through a bounded queue, we have tighter control over the behaviour when the queue becomes full. In our case, we reply {:error, :full} to the HTTP API which can the act accordingly: for example, it can retry later or it can reply with an error to the HTTP client so that that client can retry later (pushing the responsibility of retrying further and further).

The APNS connections

APNS.Lake, the GenStage producer, pushes events to APNs connections (APNS.Connection) processes, which are GenStage consumers. Each connection has a maximum demand of events that is exactly the same as the maximum number of concurrent streams. Conceptually, the logical thing to do would be to initially ask for 1000 events (one notification for each stream) and then to ask for one event each time a stream is closed. In practice, this didn’t work optimally because asking for one event at a time would mean that producer and consumer would exchange many messages, ending up degrading performance. Instead, we buffer demand and make the connection wait until a small number of streams (50 in our case) frees up and ask the producer for this number of events. A consumer never asks for more than max_concurrent_streams - currently_open_streams events, so that the total number of concurrently open streams can’t go over the max concurrent streams limit.

Buffering events also helped us improve the performance of our HTTP/2 client. Each sending of a notification corresponds to a new HTTP/2 request, which boils down to a write on the HTTP/2’s connection TCP socket. This means that by not buffering events we would do many TCP writes close to each other. Now that we buffer events, our HTTP/2 client is designed to send requests issued at the same time as a single blob of bytes down the TCP socket. If we buffer 50 events at a time and send them all at once, we only do one TCP write for all those corresponding HTTP/2 requests.

The work each connection does to process events – that is, send notifications – is asynchronous because it sends a request to Apple and then asynchronously waits for the response. For this reason, we can’t use GenStage’s internal demand mechanism but we have to use the :manual demand mode and send demand to the producer ourselves.

Simplified code for the connections looks like this:

defmodule APNS.Connection do

use GenStage

def init(host_info) do

{:ok, client, mcs} = HTTP2Client.connect(host_info)

state = %{

client: client,

events_to_ask: mcs,

max_concurrent_streams: mcs

}

{:consumer, state, subscribe_to: [APNS.Lake]}

end

def handle_subscribe(:producer, _options, from, state) do

state = maybe_ask_demand(%{state | subscription: from})

{:manual, state} # we set the demand to :manual

end

def handle_events(notifications, _from, state) do

# Work is async so we send notifications without

# sending any demand upstream.

state = send_notifications(notifications, state)

{:noreply, [], state}

end

def handle_info(stream_response, state) do

process_stream_response(stream_response)

events_to_ask = state.events_to_ask + 1

state = maybe_ask_demand(%{state | events_to_ask: events_to_ask})

{:noreply, [], state}

end

def maybe_ask_demand(state) do

if state.events_to_ask >= 50 do

GenStage.ask(state.subscription, state.events_to_ask)

%{state | events_to_ask: 0}

else

state

end

end

end

The number of connections we have today (around 10) was the result of experimenting with the optimal number of connections for a single machine.

Performance and robustness

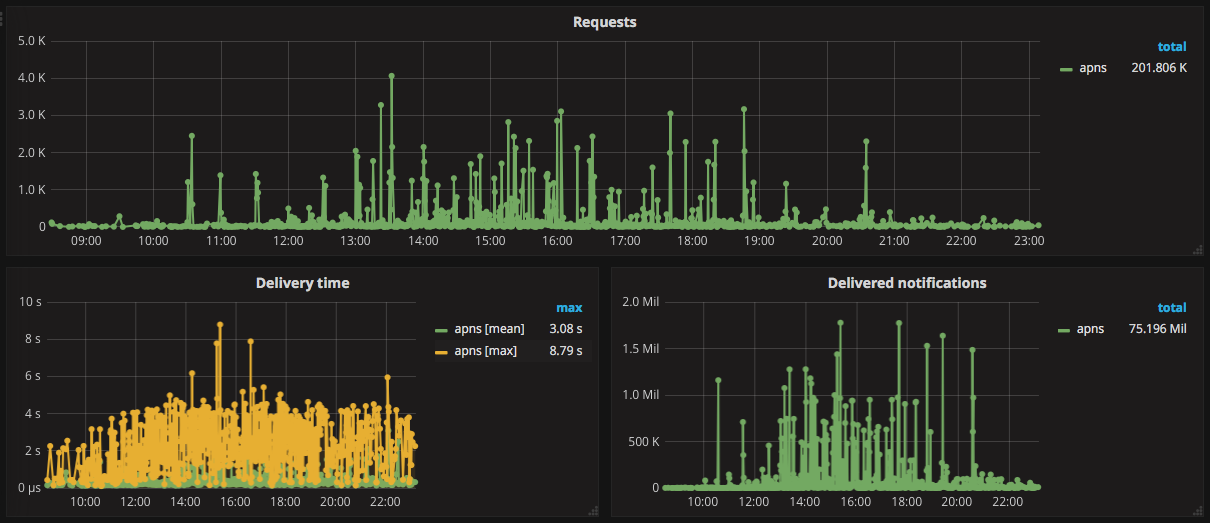

We’re very happy with the performance we’re getting out of this system. The graph below shows how a day with many big matches looks like in our metrics dashboards:

In our system we have two servers handling Apple notifications, and the graph above shows the performance for the two of them combined. At peak times, we are able to send more than 1.3 million notifications in around 10 seconds which is a number we’re satisfied with for the time being.

While we aimed mostly for performance, rearchitecting this system also improved the robustness of our system. Thanks to the work being very distributed over many HTTP/2 connections, the bounded queue that ensures we don’t get overflows, and the isolation that Elixir and the Erlang VM provide, we are confident that the problems that may happen will only affect small parts of the system without significantly degrading the overall performance.

Conclusions and next steps

In this post, we explored how we took advantage of GenStage to build a system around the new HTTP/2 Apple API that is able to reliably deliver millions of push notifications to our users. While we’re happy with the current numbers, we have still improvements that we can make to the system in case we’ll want to squeeze out even more performance. One of them is possible thanks to how we distribute the workload in parallel and over multiple servers (albeit only two for now): this means that the system is scalable, which in turn means that if we throw more servers at it, we’ll be able to increase the throughput of notifications we’re able to send.